GroundCUA

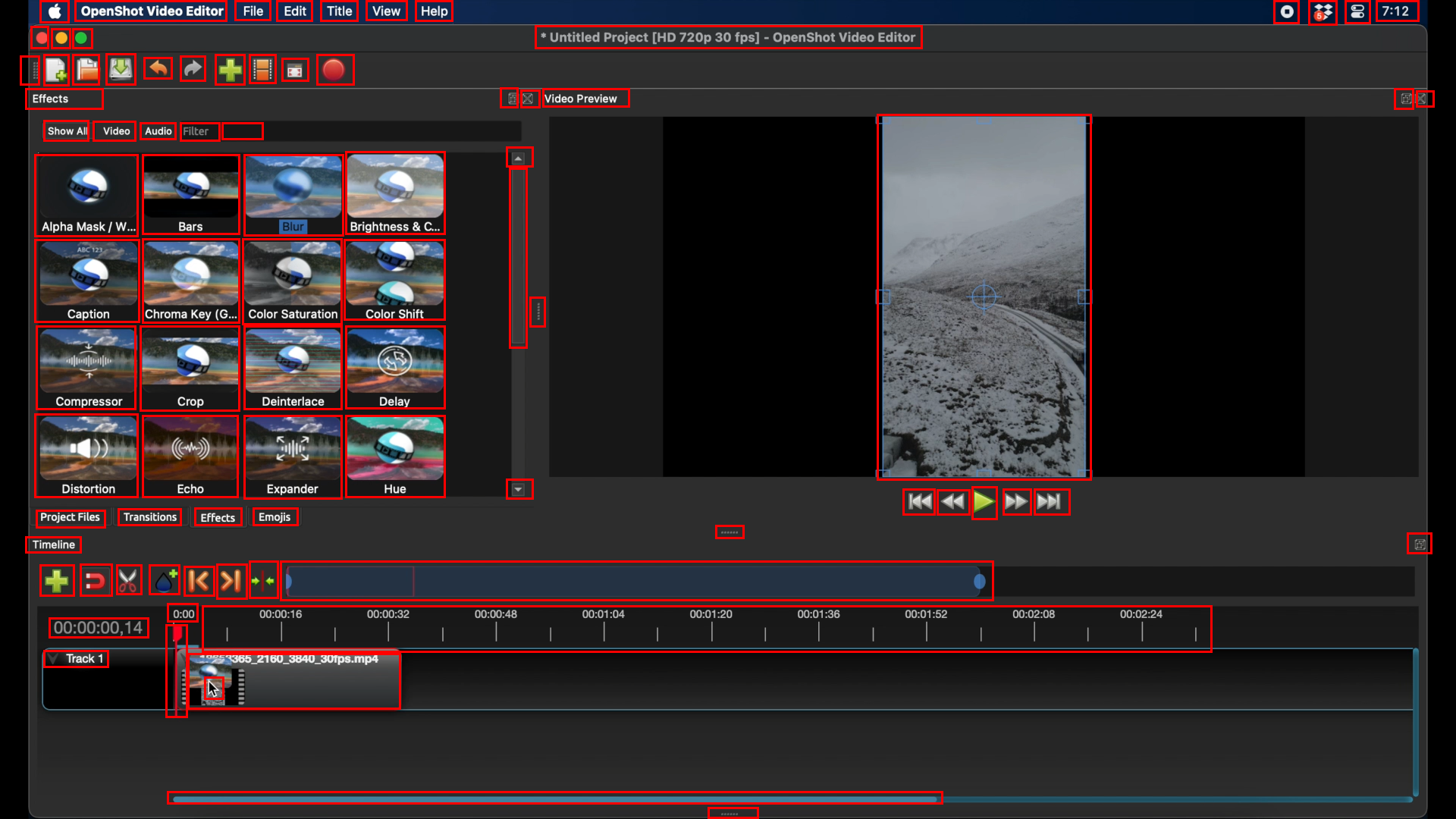

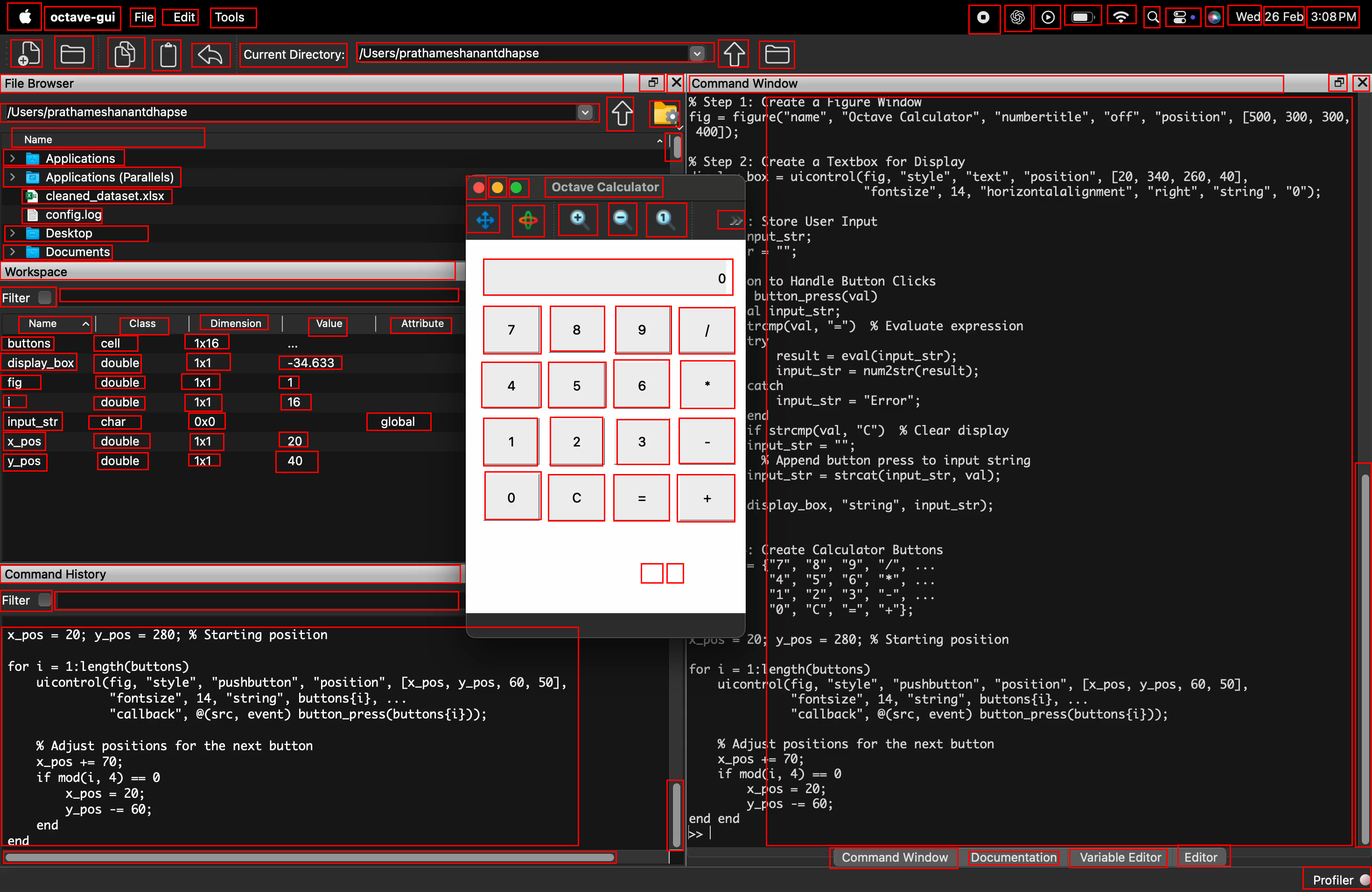

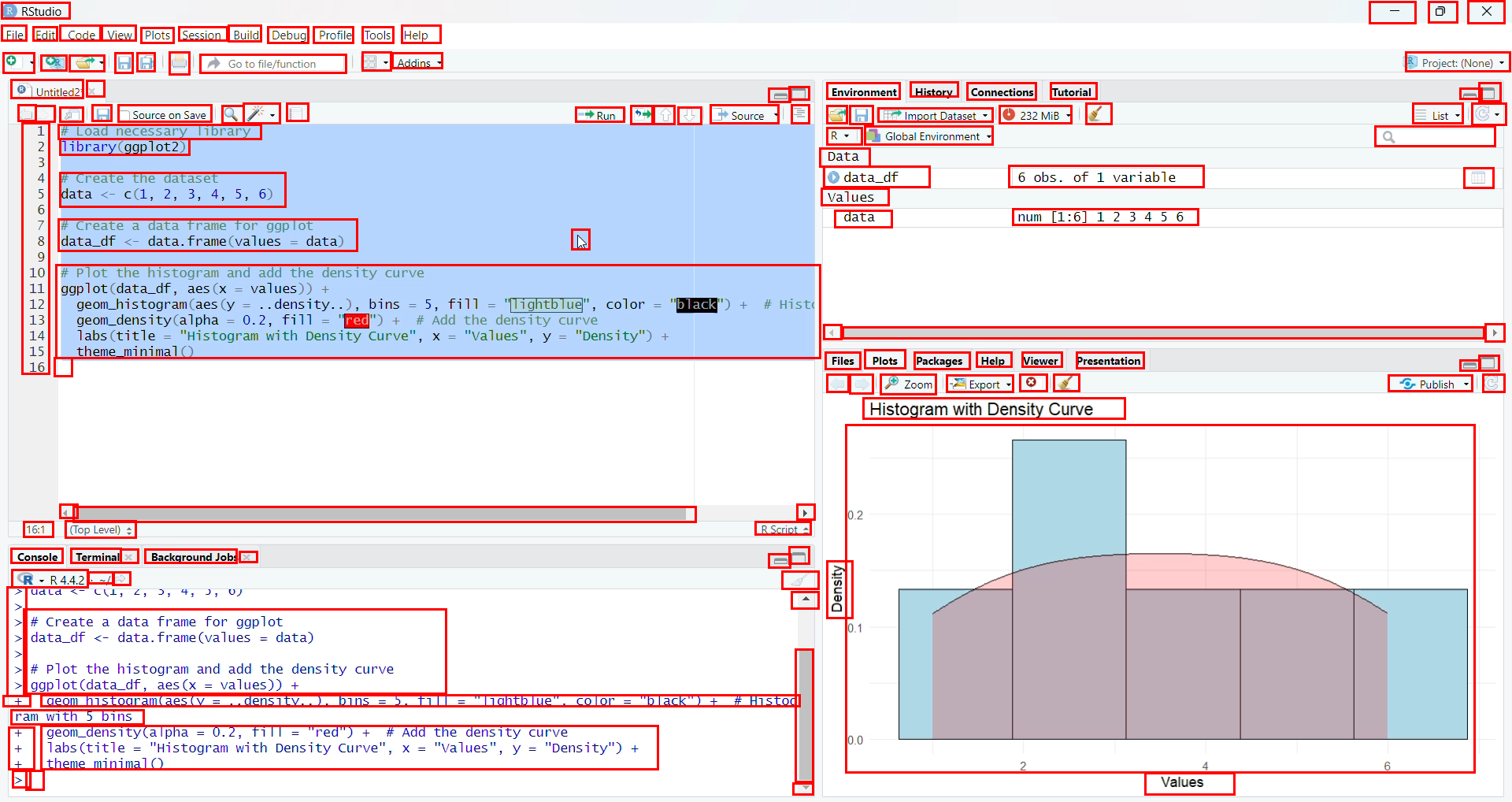

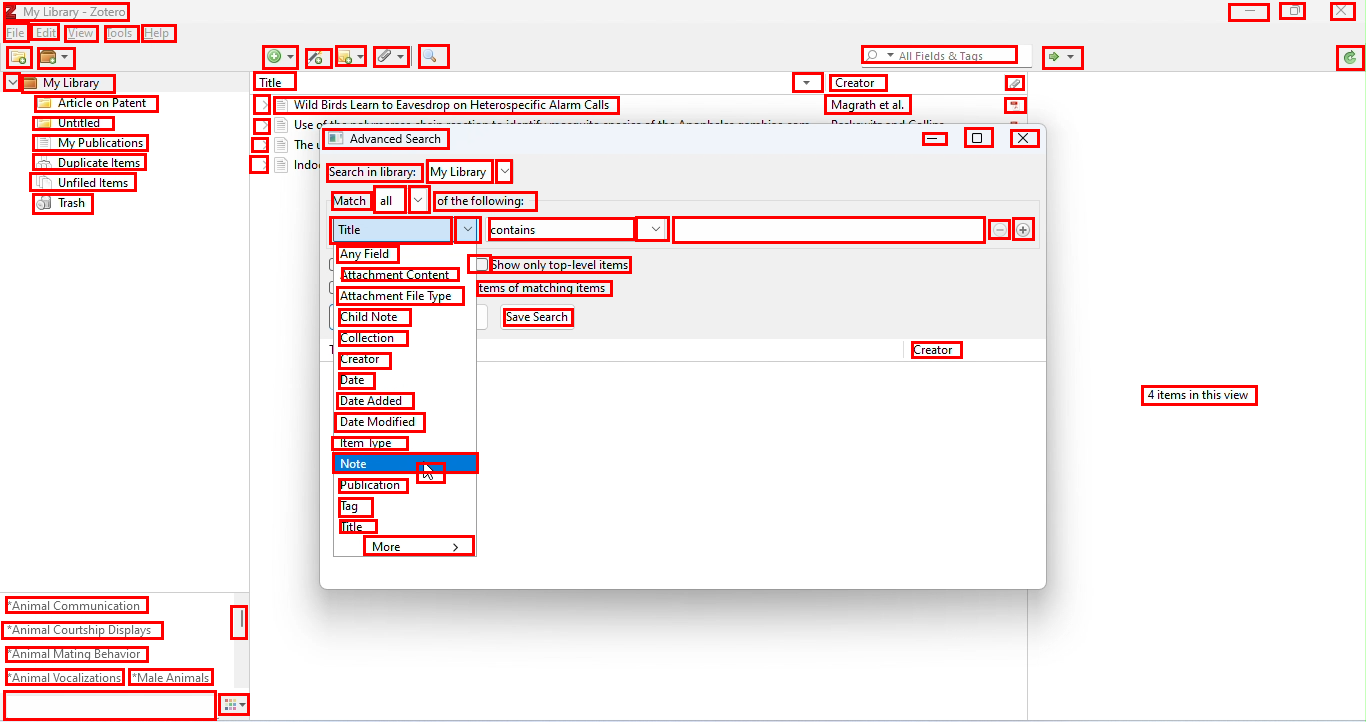

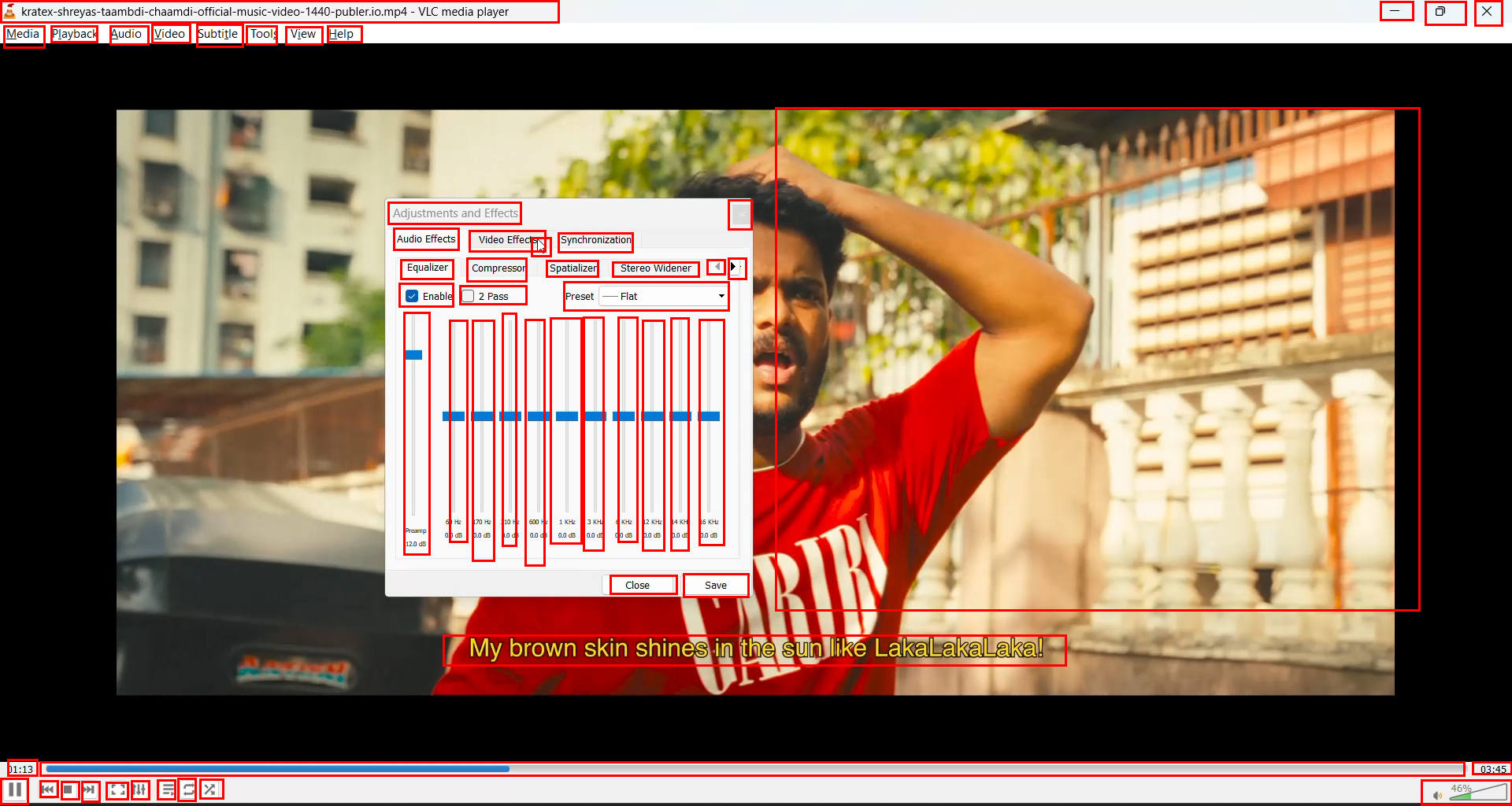

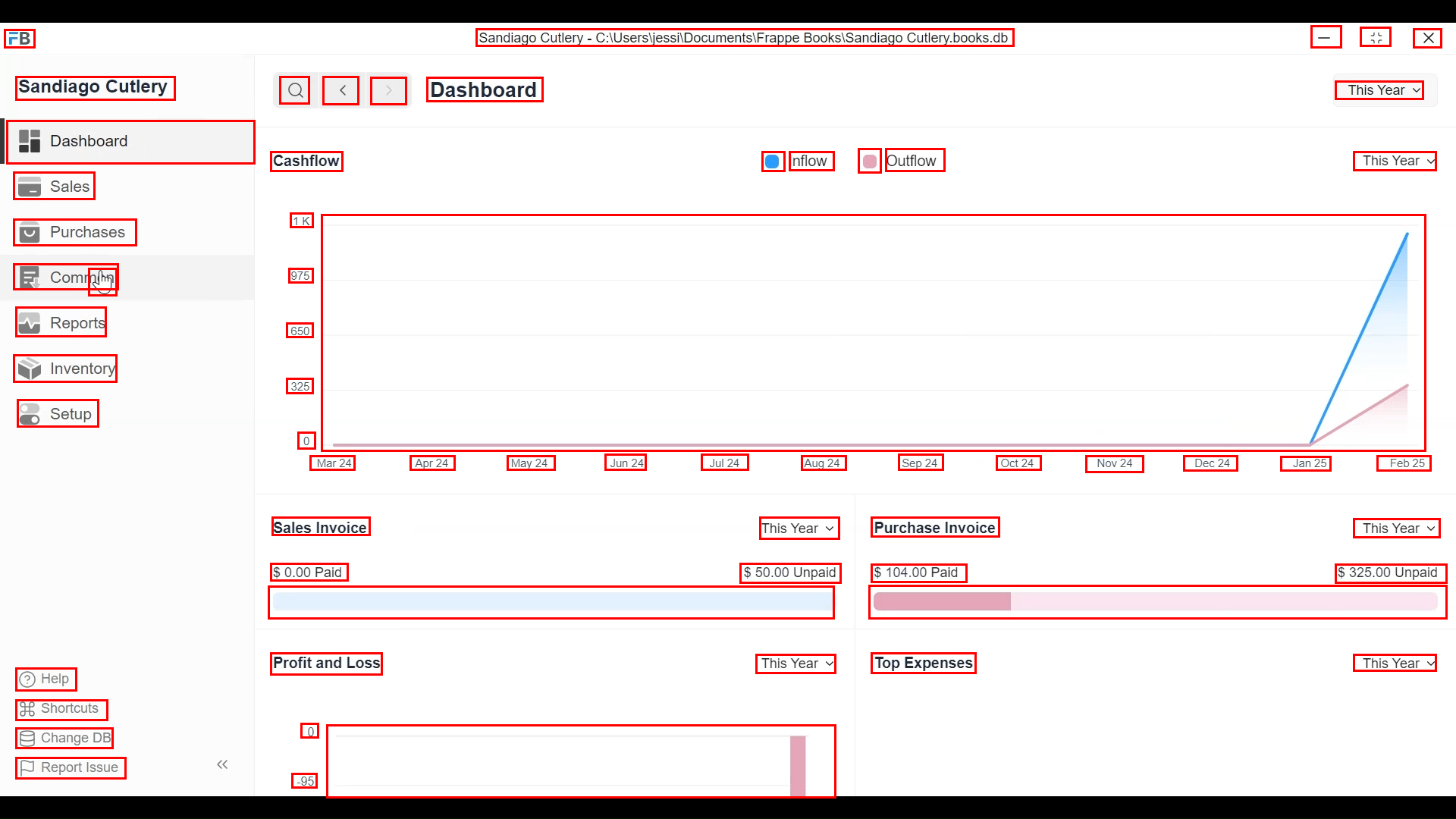

Grounding Computer Use Agents

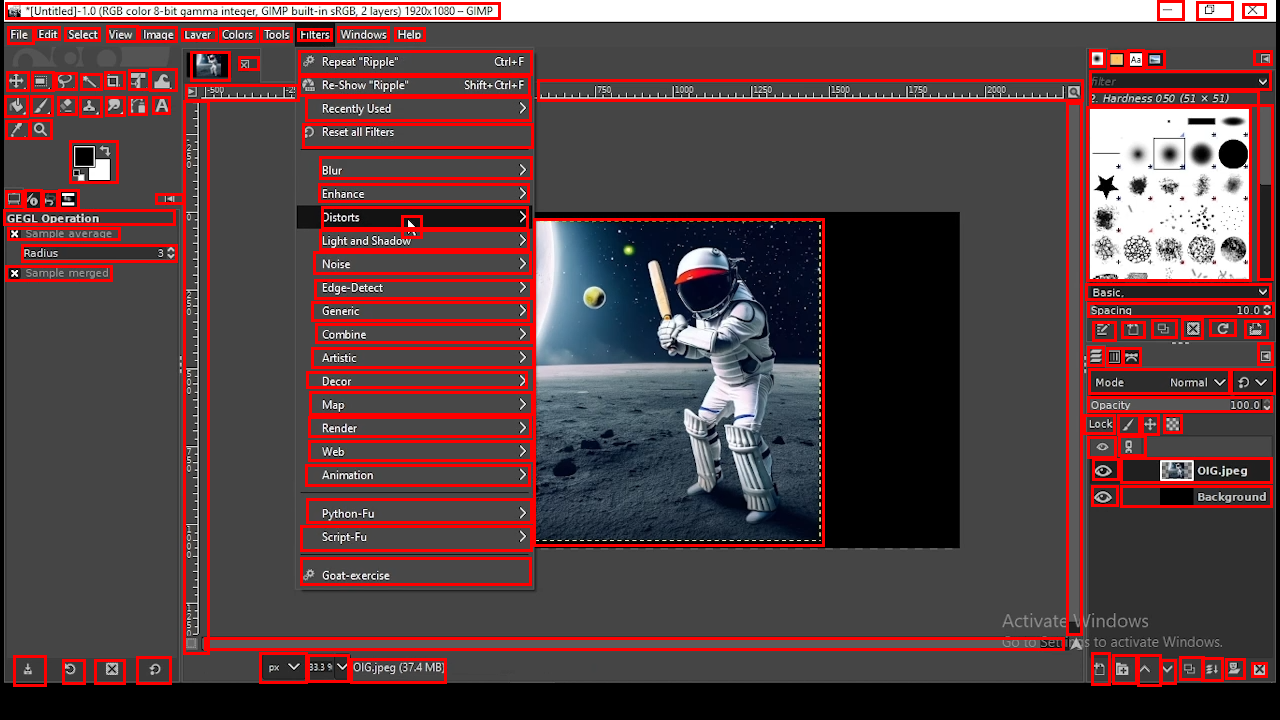

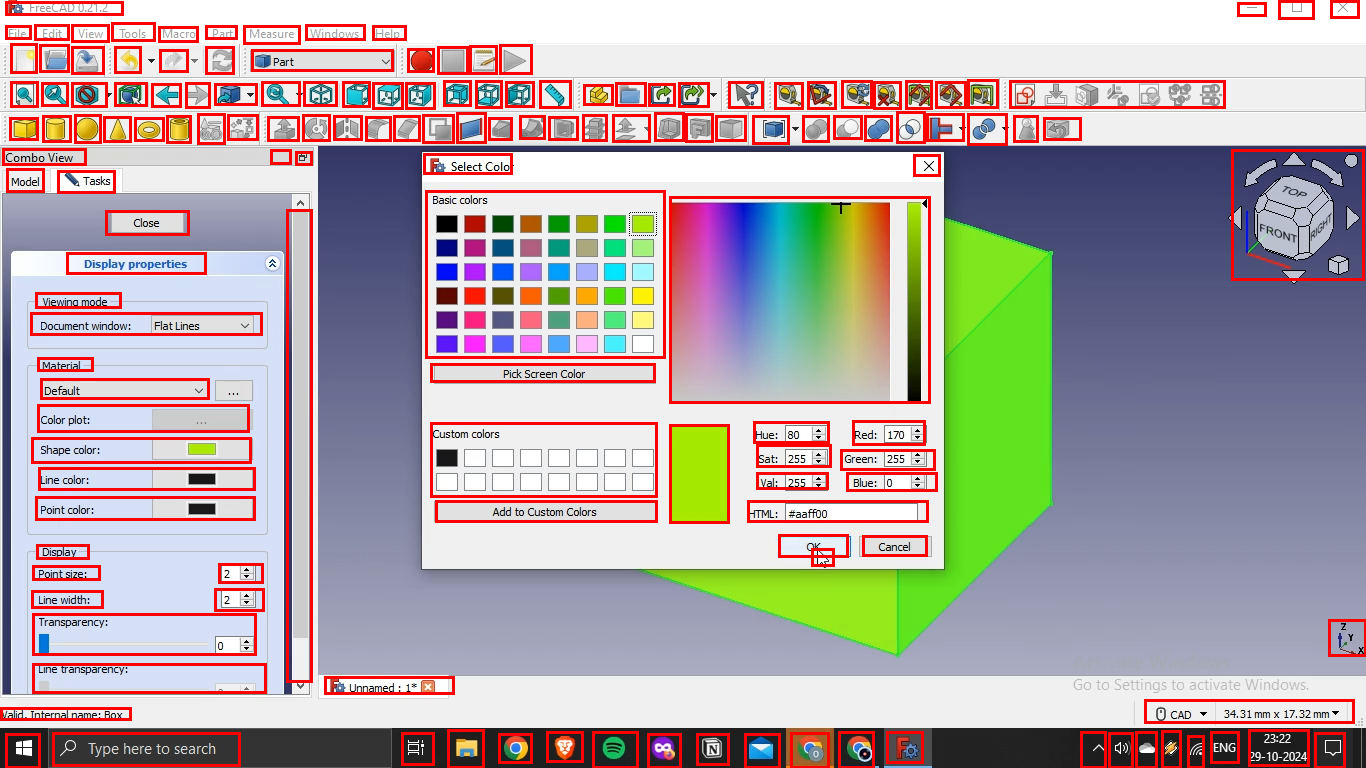

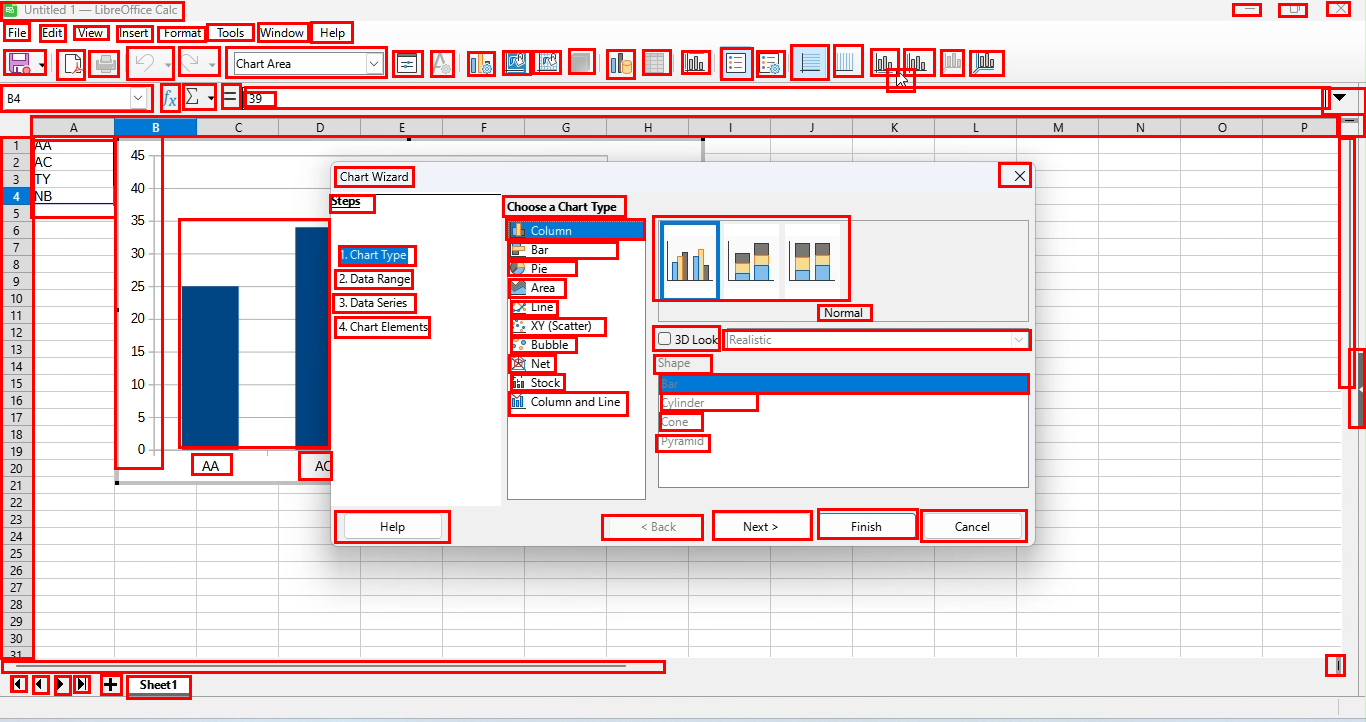

on Human Demonstrations

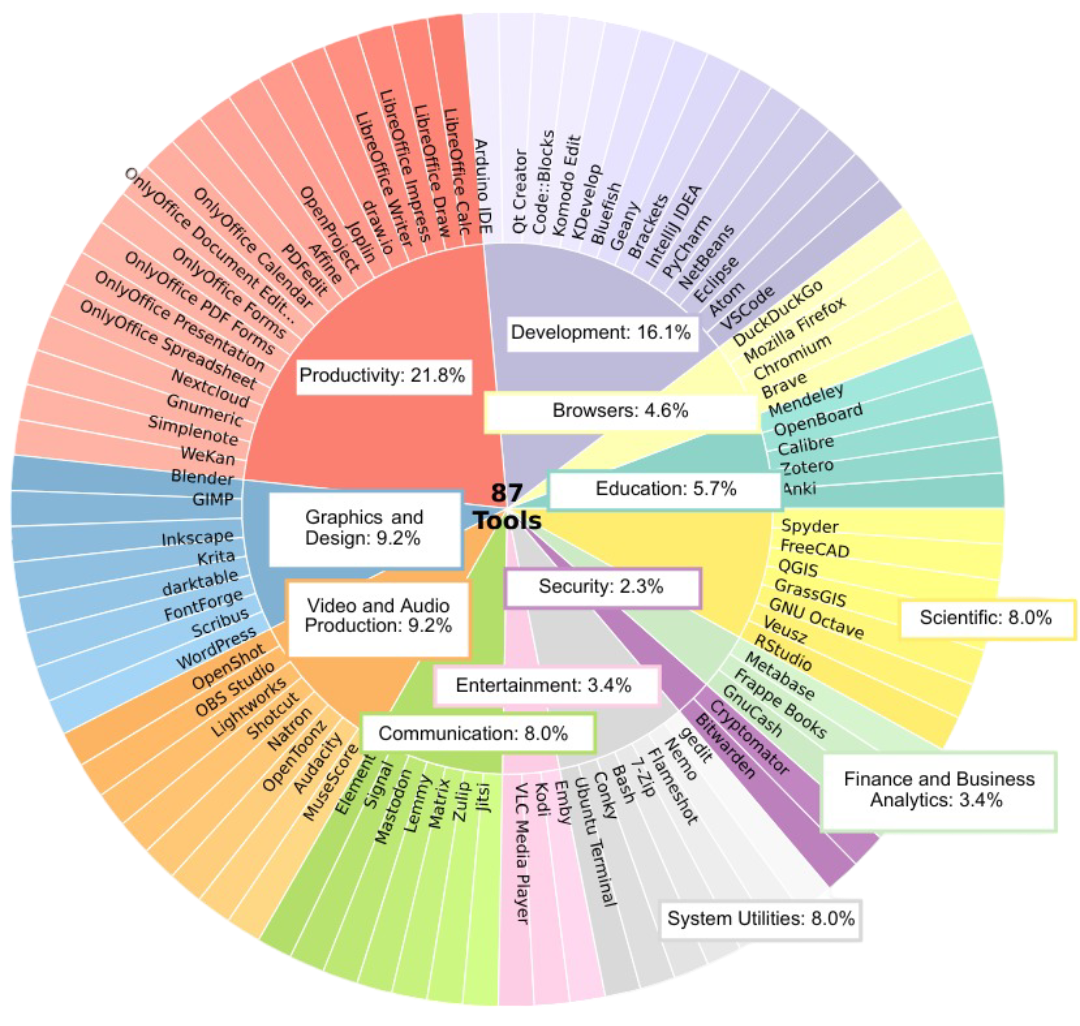

Achieves state-of-the-art (SOTA) desktop grounding with 700K samples, compared with 9M+ in prior work

Dense supervision • Expert annotations • Cross-platform generalization